Automate Toggling Pipeline Triggers During Office Hours…a Weird Limitation and Solution in Azure Data Factory

Preface:

This week, an unusual request landed on our desks, prompting me to share a brief highlight on TDAD. I want to give a special shout-out to my colleague Elisabeth Henhapl for devising a solution that, in my opinion, ingeniously leveraged existing Data Platform components. This not only offered a swift resolution in our environment but also stands out as a testament to creative problem-solving. This post marks the inaugural ‘Developer Spotlight,’ where I showcase the inventive and resourceful Data Platform solutions crafted by members of my developer circle. I trust you’ll find these insights valuable!

Issue:

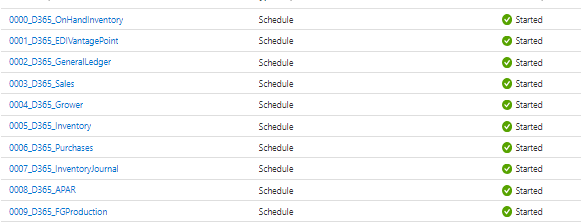

At present, our team is actively engaged in developing a BI data platform with a primary focus on implementing an ‘Export to Data Lake’ solution for Dynamics 365 F&SC. (For additional information on D365 data platform builds, please refer to the relevant this section of the Blog Page.) In brief, our batch processing is organized around various Modules such as Sales, Financials, Inventory Movement, each equipped with distinct triggers.

Currently we are processing our Sales Batch Fact and Dimension Tables hourly. Sales is a fairly complex data model comprised of ~60 high data volume base tables that are processed from the Data Lake Files, to the federated Data Warehouse, and finally to a couple reporting optimized data marts.

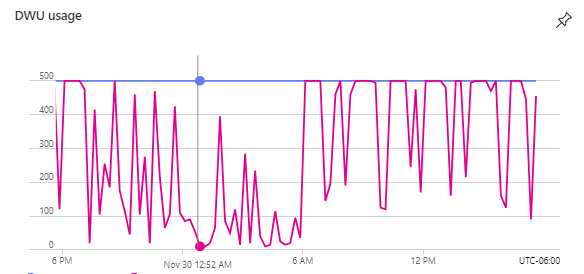

Due to this high data volume, this process can run long during high module processing times. This is mainly attributed to the capacity being reached in our Dedicated SQL pool where our warehouse lives:

Based on the visual above, we see that we are maxing out our capacity several times a day given the existing size. Our team has put in a request to get the capacity increased, but there is some red tape that needs to be traversed before we can get sizing increased. Due to the current size, our sales module began to overlap when the dedicated pool is maxed out during business hours causing data integrity issues in our federated Data Warehouse tables.

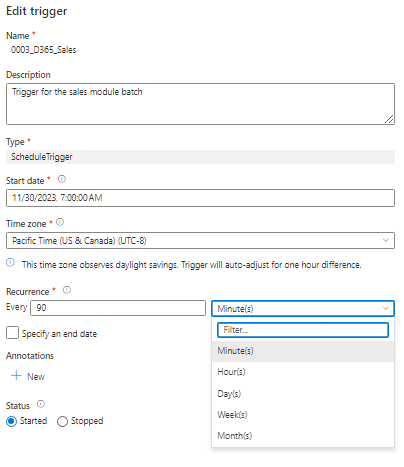

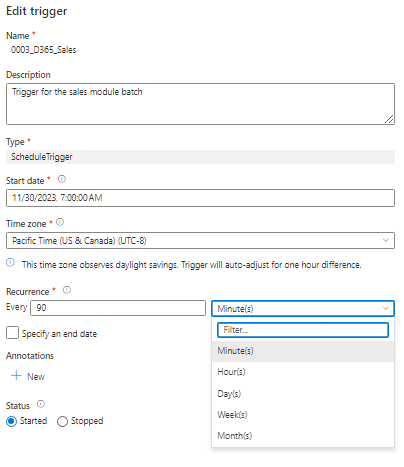

It was determined by project stakeholders that, while we wait for our scaling request to pass through the cloud governance board, we should decrease the run frequency during business hours from running every hour, to running every 90 minutes. At the surface this seems like a very straight forward request, just add an additional 30 minute stagger to the trigger. So we agreed that would work and reporting users were notified of the temporary degradation of SLAs.

So as we hopped in to make the 90 minute change, we were surprised to find that ADF/Synapse Pipelines did not support trigger configuration for specific times. You can either specify specific time intervals that run 24/7 or specify triggers during business hours that share the same minute classification.

Due to the fact that we cannot run process jobs 24/7 due to Dedicated Pool Maintenance and Validation Jobs running around 2AM and limited cost benefit to doing so, the standard 90 minute option was out. We also couldn’t leverage the advanced options, because they don’t support the rolling 90 minutes during office hours.

So we were in a tough spot, stakeholders had been notified of 30 minute delay to their SLAs, but there wasn’t a straightforward way to configure that natively in the portal.

Research:

So like all great developers do, it was time to turn to our old friend the internet to see if someone had solved for this issue before. Turns out, a lot of folks shared in our frustration. Many people had said it would be nice to have some mechanism to toggle pipelines on and off during business hours for maintenance tasks that generally occur overnight.

Based on our research, there was no way to accomplish this using the UI in Azure Synapse.

The Solution:

Then Elisabeth had a great idea…

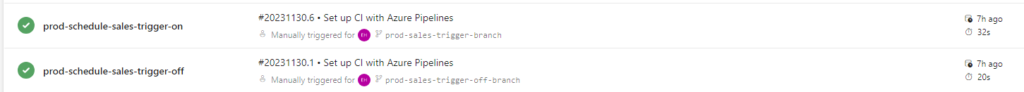

We already have a process that toggles triggers on and off for our code promotion via Azure DevOps. We toggle triggers off, so no pipelines are running while code changes are made to the Dedicated SQL Pool and the Synapse Workspace in production, and then turn them on again upon the completion of the release pipelines.

Would it be a viable option to turn triggers on and off during maintenance hours using Azure DevOps?

Turns out yes!

prod-schedule-sales-trigger-off code example:

trigger:

- none

schedules:

- cron: "45 0 * * *" # Specify time in UTC -- 4:45 PM PST, which is 0:45 AM UTC

branches:

include:

- '*'

pool:

vmImage: windows-latest

steps:

- checkout: self

- task: toggle-triggers-dev@2

inputs:

azureSubscription: '[azure subscription placeholder]'

ResourceGroupName: '[Azure Synapse Resource Group Name]'

WorkspaceName: '[Azure Synapse Workspace Name]'

ToggleOn: false

Triggers: '0003_D365_Sales' #trigger name prod-schedule-sales-trigger-on code example:

trigger:

- none

schedules:

- cron: "45 0 * * *" # Specify time in UTC -- 4:45 PM PST, which is 0:45 AM UTC

branches:

include:

- '*'

pool:

vmImage: windows-latest

steps:

- checkout: self

- task: toggle-triggers-dev@2

inputs:

azureSubscription: '[azure subscription placeholder]'

ResourceGroupName: '[Azure Synapse Resource Group Name]'

WorkspaceName: '[Azure Synapse Workspace Name]'

ToggleOn: true

Triggers: '0003_D365_Sales' #trigger name By scheduling these pipelines in Azure DevOps we were able to configure our sales trigger as such:

And let the CI/CD PowerShell handle turning on and off the trigger when we have scheduled maintenance and lower usage!

This is a great example of thinking outside the box when tackling a seemingly unsolvable problem 🙂 Great work Elisabeth! Hopefully some kind of functionality for business hour triggers becomes possible in ADF in Fabric as it seems that there is a lot of demand from users around this topic!

But until that day comes, hopefully this was helpful in providing a possible solution for you at your organization. What do you think? Is there another way you would’ve tackled this problem? Let me know in the comments!